SIDS: Reference - Neuronal Noise

http://www.scholarpedia.org/article/Neuronal_noise

[cited: Andre Longtin (2013) Neuronal noise. Scholarpedia, 8(9):1618, revision #135875]

Curator: Andre Longtin

Andre Longtin, Physics Department, University of Ottawa, Ottawa, Canada

Neuronal noise is a general term that designates random influences on the transmembrane voltage of single neurons and by extension the firing activity of neural networks. This noise can influence the transmission and integration of signals from other neurons as well as alter the firing activity of neurons in isolation.

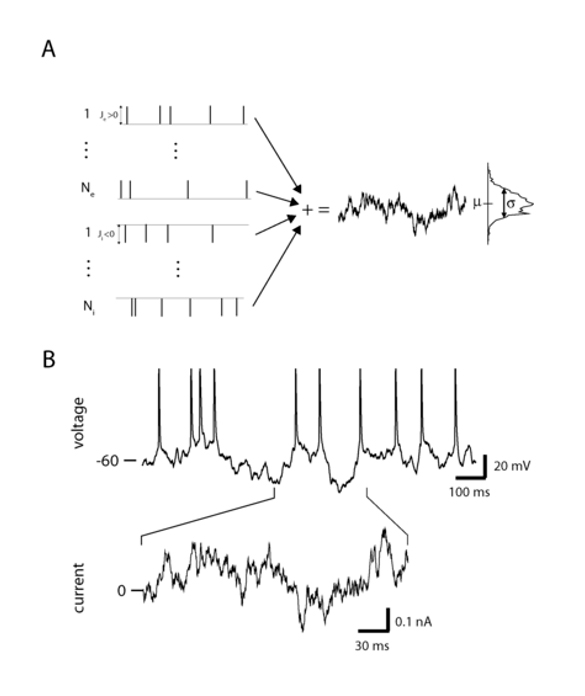

Figure 1: Noise driven neurons. A. Synaptic bombardment of $N_e$ excitatory and $N_i$ inhibitory pre-synaptic current pulses, where the pulse strengths are $J_e$ and $J_i$ respectively. Each pulse train is a Poisson process, with firing rate $\nu_e$ and $\nu_i$ respectively. In the limit of large $N_e\nu_e$ and $N_i\nu_i$ we represent the summed process as a white noise process (right panel). B. The postsynaptic membrane and spike response of a real cortical neuron recorded in vitro driven by filtered white noise current in a whole cell patch clamp experiment. The current used to drive the membrane response is shown for a specific time section of the membrane response (bottom). Experiments performed by Jaime de la Rocha with details given in De la Rocha et al. 2007.

Introduction

This article presents a concise overview of basic concepts and tools for understanding the random features of neural activity. More details can be found in the suggested references.

Neural response variability

A single neuron exhibits different responses to repeated presentations of a specific input signal. This response variability or noisiness and its consequences for neural network function have been under scrutiny for many decades (Schmitt 1970, DeFelice 1981, Holden 1976). This is also true of firing patterns without input signals, i.e. spontaneous activity, which display varying degrees of randomness. At one extreme lie pacemaker cells, which fire almost periodically in spite of intrinsic sources of noise. At the other are cortical pyramidal cells with highly irregular firing; they almost embody the mathematical notion of a renewal process whose successive time intervals between firings are vanishingly correlated. For such cells, noise sources are coupled to the neuron’s dynamics in a way that strongly influences the firing activity.

Noise and nonlinearity

This view that the main measure of the action of noise is seen in neuronal firing variability is commonplace. However, noise acts on many different spatial and temporal scales in single neurons, from the molecular noise involved in genetic transcription and translation that modifies ion channel densities, all the way up to firing activity on the scale of the whole brain as seen in EEG recordings and behavioral outputs (Swain and Longtin, 2006; Deco et al. 2012). Given the number of nonlinear processes in the nervous system, and the rapidly evolving physics and mathematics literature on the nontrivial effects that noise can have in nonlinear systems, it is not surprising that stochastic neuronal dynamics are a particularly active area of research (Lindner et al. 2004).

Dynamics versus noise

In any experimental or modeling effort, it is a challenge to unambiguously identify and disentangle noise sources. Indeed, noise in one system may be considered the signal or dynamics in another system, or at another spatiotemporal scale. For example, determining how ion channels fluctuate between open and closed states (Chow and White 2000; White et al. 2000) requires a description on the nanoscale, while coarser descriptions involving different mathematical formalisms can explain the impact of such fluctuations on single neuron firing. Further, the identification of noise sources raises central questions about cellular and systems design: is noise a hindrance, potentially degrading the function of single cells and networks, or is it a source of variability that cells advantageously exploit (Stein et al. 2005, Gammaitoni et al. 1998)? This has led to investigations beyond the traditional approach of scrutinizing the mean response, seeking significance in the variance itself.

Sources of noise

From genetic processes to brain rhythms

Genetic and metabolic noise is a source of variability within the neuron, but its effect on neuron firing has barely been explored. Rather, researchers have focused on noise sources that act on faster time scales: in ion channels and pumps, which control ion flow across the plasma membrane (DeFelice 1981); at synapses, which mediate connections between neurons; in whole neurons, via the summed currents flowing through ion channels; in neural networks, where the noise is related to the activity of all neurons impinging on a given neuron; and in brain rhythms, generated by millions of neurons interacting across large spatial scales. The dominant source is usually synaptic noise, i.e. noise coming from the activity of other neurons. Synaptic strengths also fluctuate because of the different availabilities of neurotransmitter and of components of various biochemical signaling pathways. Noise on longer time scales arises from the long-term modulation of neural activity by neuromodulators, such as serotonin. A recent review of neuronal noise, particularly for sensori-motor control, can be found in Stein et al. (2005). Noise entered neuroscience many decades ago. An important focus of early experimental and modeling work has been ion channel noise. An excellent overview of the early literature on stochastic neural modeling can be found in Holden (1976) and Tuckwell (1989). A more recent analysis of stochastic neuronal dynamics can be found in Gerstner and Kistler (2002).

Thermal noise

Thermal noise, also known as Johnson-Nyquist noise, is intrinsic to any system operating above absolute zero temperature. The power of the associated voltage fluctuations seen across a resistance is directly proportional to the absolute temperature. This source is rarely considered in neural systems, since it is weak in comparison to other sources. However, thermal noise reflects the molecular motion that drives other forms of noise such as conductance fluctuations of specialized transmembrane proteins known as ion channels. The properties of the resulting conductance noise are discussed below. Further, the spectral properties of thermal noise can yield an estimate of the complex impedance of the neuron membrane, just like sine wave analysis can (Stevens, 1972). The thermal voltage noise has a power spectral density given by

$$P_{VV}(f) = 4kT\,\mathrm{Re}[Z(f)]$$

where $Z(f)$ is the complex impedance, $T$ is the absolute temperature and $k$ is Boltzmann’s constant. For a simple parallel RC model of membrane, this leads to

$$P_{VV}(f) = \frac{4kTR}{1 + 4\pi^2 \tau_m^2 f^2}$$

where $\tau_m \equiv RC$ is the passive membrane time constant. The current noise has spectral density $P_{II} = 4kT/R$. In both cases, we see that the power scales linearly with temperature.
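The RC expression for the voltage noise can be evaluated numerically. The following is a minimal sketch, not a measurement protocol: the function name and the example values (a 100 MΩ input resistance, 100 pF capacitance and 310 K) are illustrative assumptions rather than values taken from the article.

```python
import math

BOLTZMANN_K = 1.380649e-23  # Boltzmann's constant in J/K


def thermal_voltage_psd(f_hz, resistance_ohm, capacitance_f, temperature_k):
    """Thermal voltage noise PSD (V^2/Hz) of a parallel RC membrane model.

    Implements P_VV(f) = 4 k T R / (1 + 4 pi^2 tau_m^2 f^2), tau_m = R C.
    """
    tau_m = resistance_ohm * capacitance_f
    lorentzian = 1.0 + (2.0 * math.pi * tau_m * f_hz) ** 2
    return 4.0 * BOLTZMANN_K * temperature_k * resistance_ohm / lorentzian


# Hypothetical example values, chosen only for illustration.
R_IN = 100e6   # ohm
C_M = 100e-12  # farad, so tau_m = 10 ms
TEMP = 310.0   # kelvin

# Frequency at which the PSD has dropped to half its low-frequency value.
corner_hz = 1.0 / (2.0 * math.pi * R_IN * C_M)
```

Doubling `temperature_k` doubles the returned value at every frequency, which is the linear scaling with temperature stated above.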

Ionic conductance noise

Conductance fluctuations in ion channels are driven by thermal fluctuations, and in some sense, amplify these fluctuations. These protein channels are made up of subunits and complex domains that weave in and out of the cytoplasmic membrane, and undergo spontaneous thermally-driven changes in conformations between various open and closed states. The open state is characterized by a pore that allows specific types of ionic species to migrate through the membrane, under the influence of an electrochemical driving force. Such a force arises due to gradients in voltage and ionic concentration across the neural membrane. Molecular dynamics calculations explain the fundamental physical basis for these changes. Yet a more phenomenological kinetic description (see “Multiplicative Noise” below) is generally used to explain the transition rates between states. This description specifies how these rates are modified by the transmembrane potential, the concentration of various ionic species, and the presence of specific ligands such as neurotransmitters. In particular, the deterministic (i.e. noise-free) Hodgkin-Huxley kinetic formalism assumes that the number of channels is large enough that the fluctuations can be neglected. When the number of channels is not large, single channel fluctuations can be described by a Markov process and shown to lead to action potentials (Strassberg and DeFelice, 1993). For a simple two-state model of an ion channel, the power spectral density of the conductance follows a Lorentzian, i.e. it is flat at low frequencies and falls off as $1/f^2$ at high frequencies (Stevens 1972; DeFelice 1981).
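The two-state channel mentioned above can be simulated as a continuous-time Markov process. The sketch below assumes constant (voltage-independent) opening and closing rates; the names `rate_open` and `rate_close`, and the example rates, are illustrative assumptions. For such a channel the stationary open probability is `rate_open / (rate_open + rate_close)`, and the Lorentzian corner frequency is set by the relaxation rate `rate_open + rate_close`.

```python
import math
import random


def simulate_two_state_channel(rate_open, rate_close, t_end, seed=0):
    """Simulate one channel flipping between closed and open states.

    rate_open:  closed -> open transition rate (1/s)
    rate_close: open -> closed transition rate (1/s)
    Dwell times in each state are exponentially distributed.
    Returns the fraction of time spent in the open state.
    """
    rng = random.Random(seed)
    t = 0.0
    is_open = False
    open_time = 0.0
    while t < t_end:
        rate = rate_close if is_open else rate_open
        # Draw the dwell time, truncated at the end of the simulation.
        dwell = min(rng.expovariate(rate), t_end - t)
        if is_open:
            open_time += dwell
        t += dwell
        is_open = not is_open
    return open_time / t_end


def lorentzian_corner_frequency(rate_open, rate_close):
    """Corner frequency (Hz) of the conductance spectrum of a two-state channel."""
    return (rate_open + rate_close) / (2.0 * math.pi)


# Hypothetical rates: expected open probability 50 / (50 + 150) = 0.25.
open_fraction = simulate_two_state_channel(50.0, 150.0, t_end=200.0)
```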

Ion channel shot noise and Campbell’s theorem

During the time a channel is open, ions migrate in complex ways and varying amounts. The associated fluctuations are termed channel shot noise. This noise is to be distinguished from the shot noise used to describe random spiking times of neurons, which leads to random release times of neurotransmitter at synapses, and random currents in the postsynaptic cell. If the current caused by the movement of one ion across the membrane is measured as $F(t)$, and the ions move independently from one another but exactly in the same manner, and their rate of entrance into the membrane is a Poisson process of rate $r$, then the shot noise current will have a power spectral density given by $P_{\mathrm{shot}}(f) = r|\tilde{F}(f)|^2$, where the tilde denotes the Fourier transform. Campbell’s theorem relates the mean and variance of a current to the rate of random arrivals of events and the shape of each individual current. It states that the mean current contributed by shot noise will be given by $\mu_I = r\int_0^\infty F(t)\,dt$. Likewise, the variance will be given by $\sigma_I^2 = r\int_0^\infty F^2(t)\,dt$.
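Campbell’s theorem can be checked numerically. The sketch below assumes an exponentially decaying single-event current, $F(t) = a\,e^{-t/\tau}$, which is an illustrative choice not made in the article; for this kernel the theorem gives a mean of $r a \tau$ and a variance of $r a^2 \tau / 2$. All function names and parameter values are hypothetical.

```python
import math
import random


def simulate_shot_noise(rate, amp, tau, t_end, dt, seed=0):
    """Sample a Poisson shot-noise current with kernel amp * exp(-t / tau).

    Events arrive as a Poisson process of the given rate. The current decays
    exactly between events and is sampled every dt seconds.
    """
    rng = random.Random(seed)
    samples = []
    current, t = 0.0, 0.0
    next_event = rng.expovariate(rate)
    next_sample = 10.0 * tau  # skip the initial transient
    while next_sample <= t_end:
        if next_event <= next_sample:
            # Decay up to the event, then add one kernel amplitude.
            current = current * math.exp(-(next_event - t) / tau) + amp
            t = next_event
            next_event += rng.expovariate(rate)
        else:
            # Decay up to the sampling time and record the current.
            current *= math.exp(-(next_sample - t) / tau)
            t = next_sample
            samples.append(current)
            next_sample += dt
    return samples


def campbell_mean_var(rate, amp, tau):
    """Campbell's theorem for the exponential kernel amp * exp(-t / tau)."""
    return rate * amp * tau, rate * amp ** 2 * tau / 2.0


def sample_mean_var(samples):
    mean = sum(samples) / len(samples)
    var = sum((x - mean) ** 2 for x in samples) / len(samples)
    return mean, var


trace = simulate_shot_noise(rate=200.0, amp=1.0, tau=0.01, t_end=100.0, dt=1e-3)
sim_mean, sim_var = sample_mean_var(trace)
theory_mean, theory_var = campbell_mean_var(200.0, 1.0, 0.01)
```

The simulated mean and variance should agree with the theoretical values up to sampling error, which shrinks as `t_end` grows.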

Ionic pump noise

This refers to the noise associated with the operation of ion pumps, which use energy to transport ions against an electrochemical gradient (Läuger, 1991).

Synaptic release noise

Synaptic noise ultimately lies in the molecular events that follow the invasion of a synaptic bouton by an action potential. Chemical synapses are not deterministic switches that convert spikes into the release of fixed packets of neurotransmitter at synaptic boutons. Instead, they release transmitter probabilistically, and often at some low mean rate even without incoming spikes (see Koch 1999 for a review). The release probability depends on the history of firing of both the pre- and the postsynaptic neuron. Further, once a neuron fires, the action potential invades every location in the neuron, affecting receptors at its incoming synapses. There, the strength of the synapse can be modified by spike time-dependent plasticity (STDP), which uses correlations between pre-synaptic and post-synaptic firing on the millisecond time scale to alter synaptic strength on much longer time scales. Information flow is thus not unidirectional across synapses, and ongoing fluctuations in the strength of synaptic connections due to STDP and other time-dependent plasticity processes may also contribute noisy currents to a cell.

Synaptic bombardment

The main component of the noise experienced by a neuron originates in the myriad of synapses made by other cells onto it. Every spike arriving at one of these synapses contributes a random amount of charge to the cell due to the release noise discussed above. But even if each spike generated a fixed amount of charge in the postsynaptic cell, there would still be postsynaptic current fluctuations from the summation of all pre-synaptic spikes. The degree of irregularity of these fluctuations would be proportional to the temporal irregularity of the incoming spike trains, ranging from completely random Poisson inputs to periodic inputs. This synaptic noise is particularly strong in in vivo recordings, in which the cells receive their normal synaptic input. This noise further increases the mean conductance of the cell – and thus lowers its input resistance – because more ion channels are opened at a given time; this high-conductance state (see e.g. Ho and Destexhe 2000) contrasts with the low conductance state characteristic of in vitro recordings. The importance of the effect of the synaptic noise on the neural firing will depend in particular on whether the mean resting potential is close to the threshold for activation of the fast spiking current. If it is close, the fluctuations will strongly govern the firing times.

The polarity of the inputs associated with excitatory and inhibitory synapses leads to, respectively, positive and negative currents. These polarities are simply taken into account by the sign of the weight associated with the synapses (see Sections 3.2-3.4). The sum of such different synaptic processes, if they are uncorrelated, will have a mean equal to the sum of the individual means, and a variance equal to the sum of the individual variances.

Static connectivity noise

These time-dependent forms of noise are supplemented by a static form that arises from the inhomogeneity seen across cells belonging to a given class, and in the mean strength with which they connect. This randomness in connectivity patterns and cellular parameters can have important functional consequences for a network.

Slow neuromodulator noise

In between these time-dependent and static noises lies the action of neuromodulators, whose slow temporal fluctuations can, depending on the context, be seen as another source of noise.

Modeling neuronal noise

Density, correlation and coupling of the noise

Modeling the action of noise on the otherwise deterministic dynamics of a neuron involves techniques from stochastic dynamical systems (Longtin 2007). There are three main issues to contend with:

- What is the density of values that the noise variable can take? Is it binary, associated with discrete random trains of spikes? Does it have a smooth density, such as a Gaussian, as expected for continuous-time noise processes?

- What are the correlations between values of the noise at different times?

- How is the noise coupled to the deterministic equations?

The answer to these questions depends on the noise source. Intrinsic noise sources such as thermal noise, conductance fluctuations and shot noise assume values from a continuous distribution, and are assumed to fluctuate on much faster time scales than any neuronal response time scale – and are thus often modeled as Gaussian white noise sources. Synaptic inputs are due to the spiking activity of other neurons, and since firing frequencies rarely exceed 1000 Hz, they are effectively a slower form of noise. But the combined action of tens of thousands of such inputs can produce fluctuations on a much faster time scale. The early models of neuronal noise likened the evolution of the membrane potential to a random walk (Gerstein and Mandelbrot 1964), and this legacy lives on.

Multiplicative conductance noise

This noise is the one referred to in Section 2.3 above as “ionic conductance noise”. Here we discuss its mathematical modeling as a multiplicative noise in the context of stochastic dynamical systems. For a single neuron, the time evolution of the membrane potential $V$ can be quantitatively described by the deterministic Hodgkin-Huxley (HH) formalism (see Koch 1999 for an introduction):

$$C\frac{dV}{dt} = \sum_k g_k m_k h_k (V_k - V) + I(t)$$

where $I(t)$ is an external bias signal and $C$ is the membrane capacitance. The sum is over the different ionic conductances $g_k$ (including conductances increased by a neurotransmitter ligand at a synapse) present in the membrane, $V_k$ is the Nernst (reversal) potential for ion species $k$, and $m_k$ and $h_k$ are the activation and inactivation gating variables for species $k$. The HH equations are complex, usually four or more coupled highly nonlinear ordinary differential equations, and their behavior in the presence of noise sources is of interest. Conductance fluctuations will affect the $g_k$’s and gating variables (see e.g. DeFelice 1981), along with the regularity of firing and the reproducibility of the firing response to a given input. Noise thus makes $g_k$, $m_k$ and $h_k$ time-varying random variables, and the deterministic HH system becomes a stochastic dynamical system (Longtin, 2007). Different methodologies have recently been developed to deal with the proper simulation of these conductance fluctuations (see e.g. Fox and Lu 1994, Chow and White 1996, White et al. 2007). Conductance fluctuations usually are meant to include channel shot noise and thermal fluctuations in modeling studies. Because these variables multiply the voltage variable, they are a form of multiplicative noise, whose impact at a given time will depend on the voltage at that time. On the other hand, ionic pumps can be modeled to a good approximation as additive noise, e.g. as part of the input $I(t)$ (Läuger 1991).
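The voltage dependence of multiplicative noise can be made concrete with a small sketch of the current balance equation. The function names, argument layout and the example reversal potential of -77 mV are assumptions made for illustration; units are arbitrary but must be mutually consistent.

```python
def membrane_dvdt(v, g_max, m, h, v_rev, i_ext, capacitance):
    """Right-hand side of C dV/dt = sum_k g_k m_k h_k (V_k - V) + I.

    g_max, m, h and v_rev are equal-length sequences, one entry per
    ionic conductance k.
    """
    ionic = sum(
        g * mk * hk * (vk - v) for g, mk, hk, vk in zip(g_max, m, h, v_rev)
    )
    return (ionic + i_ext) / capacitance


def conductance_kick(v, delta_g, v_rev, capacitance):
    """Change in dV/dt caused by a conductance fluctuation delta_g.

    Assumes fully open gates (m = h = 1) for simplicity. The effect scales
    with the driving force (v_rev - v), so it depends on the voltage.
    """
    return delta_g * (v_rev - v) / capacitance


# Hypothetical reversal potential of -77 mV: the same fluctuation has no
# effect at the reversal potential and a large effect far from it.
kick_at_reversal = conductance_kick(-77.0, 0.1, -77.0, 1.0)
kick_depolarized = conductance_kick(-20.0, 0.1, -77.0, 1.0)
```

An additive noise term, by contrast, would change `dV/dt` by the same amount at every voltage.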

Modeling synaptic input and Stein’s model

Synaptic inputs cause abrupt changes in their associated synaptic conductance $g_{\mathrm{syn}}$ each time a spike invades the pre-synaptic bouton. An idealization of this process is to treat trains of such spikes as trains of Dirac delta functions. These trains can be assumed to act directly on the current balance equation via their conductance, and thus also form multiplicative noise, albeit of a more impulsive character. Stein’s model describes the evolution of the membrane potential $V$ of a given neuron in the presence of synaptic input (and absence of gating variables):

$$\frac{dV}{dt} = -\frac{V}{\tau_m} + \frac{I(t)}{C} + \sum_{i,j} \omega_i\,\delta(t - t_{ij})$$

where $I(t)$ is an external input bias signal, $\omega_i$ is the connection strength of presynaptic neuron $i$ to the neuron of interest, and $t_{ij}$ is the $j$-th firing time of neuron $i$.

Synaptic input can be decomposed into different subsets according to the different receptors they activate. For example, part of the synaptic input onto a cell may be excitatory, and the other part inhibitory (see also Figure 1). Assume all synaptic weights for the $j$-th type of synaptic input are equal to $\omega_j$ and that each type of synaptic input is Poisson (i.e. spike intervals are uncorrelated and exponentially distributed) with mean rate $\nu_j$. The steady state mean of the voltage in response to the total synaptic input is then given by

$$\langle V \rangle = \tau_m \sum_j \omega_j \nu_j$$

and the variance by

$$\langle \Delta V^2 \rangle = \frac{\tau_m}{2} \sum_j \omega_j^2 \nu_j.$$

When the mean input is balanced, e.g. when the mean level of excitation is equal and opposite to the mean level of inhibition, the firings are highly dependent on the fluctuations in the input: this is referred to as the fluctuation-driven regime (see Salinas and Sejnowski 2001 and references therein; Kuhn et al. 2004).
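The steady-state mean and variance of Stein’s model can be evaluated directly for a balanced input. This is a minimal sketch: the function name and the example numbers (weights of ±0.5 mV, summed rates of 1000 Hz, a 20 ms time constant) are illustrative assumptions, and the variance prefactor is taken to be $\tau_m/2$, as follows from Campbell’s theorem for an exponentially decaying voltage response.

```python
def stein_mean_and_variance(tau_m, weights, rates):
    """Steady-state mean and variance of V for Poisson synaptic input.

    tau_m:   membrane time constant (s)
    weights: voltage jump per spike for each input type (e.g. mV)
    rates:   total Poisson rate of each input type (Hz)
    """
    mean = tau_m * sum(w * nu for w, nu in zip(weights, rates))
    variance = 0.5 * tau_m * sum(w * w * nu for w, nu in zip(weights, rates))
    return mean, variance


# Balanced input: excitation and inhibition cancel in the mean, but their
# variances add, so the voltage still fluctuates.
balanced_mean, balanced_var = stein_mean_and_variance(
    tau_m=0.02, weights=[0.5, -0.5], rates=[1000.0, 1000.0]
)
```

With these numbers the mean is zero while the variance is 5 mV², which illustrates why firing in the balanced case is driven by fluctuations rather than by the mean input.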

Diffusion limit of Synaptic Noise and Ornstein-Uhlenbeck process

In the limit where the strength of the weights $\omega_i$ goes to zero and the frequency of incoming spikes goes to infinity, the sum of delta functions becomes Gaussian white noise. This is known as the diffusion limit of synaptic input (Capocelli and Ricciardi 1971; Lansky 1984). Further, if the battery term $(V_{\mathrm{syn}} - V)$ that multiplies the conductance $g_{\mathrm{syn}}$ remains relatively constant, the battery term can be replaced by a constant. Synaptic noise, in this diffusion and constant battery term limit, can essentially be approximated by additive Gaussian white noise $\xi(t)$. The resulting system reads:

$$\tau_m \frac{dV}{dt} = -V(t) + R I(t) + \xi(t),$$

which is also known as an Ornstein-Uhlenbeck (OU) process with correlation time τm (see Tuckwell 1988, 1989 for reviews).
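The Ornstein-Uhlenbeck equation above can be integrated with the Euler-Maruyama scheme. The sketch below assumes a constant drive `drive` standing in for $R I(t)$ and a noise normalization $\langle \xi(t)\xi(t') \rangle = 2D\,\delta(t - t')$; the article does not fix this normalization, so `noise_intensity` ($D$) and all example values are assumptions. Under this convention the stationary variance of $V$ is $D/\tau_m$.

```python
import math
import random


def simulate_ou(tau_m, drive, noise_intensity, dt, n_steps, v0=0.0, seed=0):
    """Euler-Maruyama integration of tau_m dV/dt = -V + drive + xi(t).

    Assumes <xi(t) xi(t')> = 2 * noise_intensity * delta(t - t').
    Returns the list of voltage samples, one per time step.
    """
    rng = random.Random(seed)
    # Standard deviation of the noise increment over one step, divided by tau_m.
    kick = math.sqrt(2.0 * noise_intensity * dt) / tau_m
    v = v0
    trace = []
    for _ in range(n_steps):
        v += (-v + drive) * dt / tau_m + kick * rng.gauss(0.0, 1.0)
        trace.append(v)
    return trace


# Hypothetical parameters: tau_m = 20 ms, expected variance D / tau_m = 1.
ou_trace = simulate_ou(
    tau_m=0.02, drive=5.0, noise_intensity=0.02, dt=2e-4, n_steps=300000, v0=5.0
)
ou_mean = sum(ou_trace) / len(ou_trace)
ou_var = sum((x - ou_mean) ** 2 for x in ou_trace) / len(ou_trace)
```

The time step should be much smaller than `tau_m`; here `dt / tau_m` is 0.01, which keeps the discretization bias in the variance well below the sampling error.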

Another more elaborate possibility is to consider that the synaptic noise itself produces a correlated noise in the form of an OU process. This is the case when one wishes to take the finite characteristic response time $\tau_s$ of a synapse into account. The synaptic current then satisfies (see e.g. Fourcaud and Brunel 2002):

$$\tau_s \frac{dI_s}{dt} = -I_s(t) + \tau_m \sum_{i=1}^{N_s} \omega_i \sum_k \delta(t - t_i^k)$$

where the delta functions mark the firing times $t_i^k$ of presynaptic neuron $i$ contributing to the synaptic current $I_s$, and $N_s$ is the number of presynaptic neurons (assumed equal here to the number of synapses). The double sum can be approximated in the diffusion limit with inputs arriving at a rate of $r$ per second as $\tilde{\mu} + \tilde{\sigma}\eta(t)$, where $\eta$ is a zero-mean Gaussian white noise, $\tilde{\mu} = \langle J_i \rangle N_s r$, $\tilde{\sigma}^2 = \langle J_i^2 \rangle N_s r$ (these latter two expressions being a consequence of Campbell’s theorem), $J_i$ denotes the synaptic strength written $\omega_i$ above, and the brackets are averages over the synapse population.

Mean firing rate in a noisy integrate-and-fire model

This approximation enables much analytical insight into the firing activity of the noisy neuron. One can calculate the density of the voltage variable using the Fokker-Planck equation associated with this Ornstein-Uhlenbeck process. However, a more realistic model of neural firing activity requires the calculation of the mean time taken to reach an absorbing boundary, namely the threshold for the fast current causing the upstroke of the action potential. This process must have a specified starting value, such as the reset voltage that follows an action potential. It is then possible to calculate the mean escape time to threshold, which can be compared to the mean interspike interval observed experimentally.

The resulting model is known as the leaky integrate-and-fire model with additive Gaussian white noise. In the case where the battery terms are allowed to vary, the model becomes the leaky integrate-and-fire model with multiplicative Gaussian white noise. Refinements to these models involve making the synaptic conductance an OU process, i.e. accounting for synaptic filtering (Brunel and Sergi 1998), or improving on the contributions caused by the Poisson shot noise character of the synaptic input (Richardson and Gerstner 2006).
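The mean escape time to threshold can also be estimated by direct simulation. The following sketch integrates a leaky integrate-and-fire neuron with additive Gaussian white noise using the Euler-Maruyama scheme. The noise normalization $\langle \xi(t)\xi(t') \rangle = 2D\,\delta(t - t')$, the threshold and reset values, and all parameter names are assumptions made for illustration.

```python
import math
import random


def simulate_lif(tau_m, drive, noise_intensity, v_thresh, v_reset,
                 dt, t_end, seed=0):
    """Simulate tau_m dV/dt = -V + drive + xi(t) with threshold and reset.

    Assumes <xi(t) xi(t')> = 2 * noise_intensity * delta(t - t').
    Returns the list of spike times (s).
    """
    rng = random.Random(seed)
    kick = math.sqrt(2.0 * noise_intensity * dt) / tau_m
    v = v_reset
    spike_times = []
    n_steps = int(round(t_end / dt))
    for step in range(1, n_steps + 1):
        v += (-v + drive) * dt / tau_m + kick * rng.gauss(0.0, 1.0)
        if v >= v_thresh:
            # Absorbing boundary reached: record a spike and reset.
            spike_times.append(step * dt)
            v = v_reset
    return spike_times


def mean_rate(spike_times):
    """Inverse of the mean interspike interval (Hz); 0 if fewer than 2 spikes."""
    if len(spike_times) < 2:
        return 0.0
    return (len(spike_times) - 1) / (spike_times[-1] - spike_times[0])


# Superthreshold drive without noise: the rate follows the deterministic
# formula 1 / (tau_m * ln((drive - v_reset) / (drive - v_thresh))).
rate_det = mean_rate(simulate_lif(0.02, 20.0, 0.0, 15.0, 0.0, 2e-5, 2.0))

# Subthreshold drive: silent without noise, firing with noise.
rate_quiet = mean_rate(simulate_lif(0.02, 12.0, 0.0, 15.0, 0.0, 2e-5, 1.0))
rate_noisy = mean_rate(simulate_lif(0.02, 12.0, 0.18, 15.0, 0.0, 2e-5, 5.0))
```

Comparing `rate_quiet` and `rate_noisy` shows noise-induced firing in the subthreshold regime; longer simulations give tighter estimates of the mean interspike interval.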

Quadratic integrate-and-fire model

The leaky integrate-and-fire neuron has an artificial threshold. The quadratic integrate-and-fire model is a better representation of the firing behavior of so-called Type I neurons near threshold:

$$\frac{dV}{dt} = \beta + V^2$$

Its firing properties in the presence of noise such as the mean and variance of the first passage time and the coefficient of variation of the interspike interval can also be calculated (Lindner, Longtin and Bulsara 2003). Ongoing work focusses on the response of this and other models, such as the exponential integrate-and-fire model (Fourcaud-Trocme et al. 2003), to mixtures of deterministic and stochastic inputs. Other formalisms approximate these problems with more phenomenological descriptions of probabilistic firing rates, often with the goal of simply accounting for single cell noise in network activity (see Gerstner and Kistler 2002 for an excellent review). These escape rates can be made more or less steep functions of the mean of the input, the steepness being proportional to an inverse temperature parameter.
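As a point of reference for the noisy case, the noise-free firing period of the quadratic integrate-and-fire model can be worked out in closed form. The calculation below assumes the common idealization that a spike corresponds to $V$ diverging to $+\infty$, followed by a reset to $-\infty$; this convention is an assumption not spelled out above. For $\beta > 0$:

$$T = \int_{-\infty}^{\infty} \frac{dV}{\beta + V^2} = \frac{\pi}{\sqrt{\beta}}, \qquad \text{so the firing rate is } \frac{1}{T} = \frac{\sqrt{\beta}}{\pi}.$$

For $\beta < 0$ the model has a stable fixed point at $V = -\sqrt{-\beta}$ and an unstable one at $V = +\sqrt{-\beta}$, so without noise the neuron is quiescent; firing then requires fluctuations that carry $V$ past the unstable point. The firing rate therefore rises continuously from zero as $\beta$ crosses zero.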

Chaos or noise?

Deterministic chaos can arise for certain parameter settings in single cell models, especially if they are driven with strong periodic input (Glass and Mackey, 1988). The resulting fluctuations must be distinguished from those produced by noise alone (see e.g. Longtin 1993 for a comparison of a deterministic and stochastic form of “random” phase locking). Chaos is particularly likely if the cell has intrinsic currents that cause bursting.

The trajectory of a chaotic system is reproducible from a given initial condition, which is not the case for a stochastic system (even though statistical properties such as moments may be constant in time). Trajectories of a system in a chaotic regime can even be predicted, although over a short time scale. The interest in chaotic properties was driven by the possibility of explaining the observed randomness of neural activity in terms of low-dimensional nonlinear dynamical systems. A complex high-dimensional system could have attractor states that are governed by a small number of variables such as modes. This picture applies to both single cell models and network models. In fact, it has been known that networks of interconnected excitatory and inhibitory neurons with fixed random connectivity can give rise to highly irregular spike trains – even in the absence of any noise (Hansel and Sompolinsky 1992; Nützel et al. 1994). It has also been shown that temporally irregular spiking patterns can arise from so-called balanced states that emerge naturally in large networks of sparsely connected excitatory and inhibitory neurons with strong synapses (Van Vreeswijk and Sompolinsky, 1996). The underlying dynamics are then strongly chaotic, i.e. have a high positive Lyapunov exponent.

The role of deterministic chaos in shaping neural responses and network functionality, in particular through its interactions with many of the aforementioned sources of noise, is an active area of investigation. Chaos brings with it notions of recurrence, where there is a finite possibility that a system will retrace its steps, i.e. that it will have similar futures for a while whenever it visits similar areas in phase space. It is particularly difficult to distinguish noise from chaos using spike train data, yet nonlinear time series methods are still being developed to improve such distinctions.

From a modeling perspective, the existence of chaos brings with it the possibility of bifurcations, i.e. of sudden changes in dynamical behaviour following a change in system parameters. The observation of such changes in an experimental setting, and in particular of one of the few universal routes to chaos via parameter changes, combined with modeling of these sudden changes, provides the most convincing argument in favor of the existence of chaos in a given system.

Effect of noise on firing and coding

Gain: superthreshold vs subthreshold

The main effect of noise is to introduce variability in the firing pattern if the neuron is firing regularly without noise, i.e. if it is superthreshold. Under periodic stimulation, the deterministic neuron may exhibit phase locking; such lockings will be disrupted in the presence of noise, making for a smoother response.

In the subthreshold regime where the cell is quiescent, noise will cause firings. In other words, noise turns the neuron into a stochastic oscillator. The firing rate versus input, i.e. the “f-I curve”, of the neuron will thus see its threshold smoothed out, and its gain (slope of the f-I curve) modified by noise.

Stochastic resonance

If the neuron receives subthreshold periodic input, noise can induce firings that are preferentially locked to the input (Gerstein and Mandelbrot 1964). A moderate amount of noise will in fact induce an output pattern that shows the strongest signature of the periodic input. This stochastic resonance effect in neurons (Longtin, 1993; Gammaitoni et al., 1998) relies on linearization of the threshold by noise, and at higher frequencies, on disruption of phase locking. Noise can also express more than one time scale in complex single neuronal dynamics, such as in noise-induced bursting (Longtin, 1997).

Noise correlations at the neural population level

It is important to also understand the correlations between the sources of noise affecting a neural population, or between the firing activity of neurons. Current work is in fact looking at how correlations propagate along and between neural networks (de la Rocha et al. 2007). There are state-of-the-art studies that incorporate noise into networks of spiking neurons to understand patterns of network activity, which further account for the co-variation in time of the noise strength with the firing rate (see e.g. Brunel and Hakim 1999). It is hard to resist the temptation to average over the noise in neuronal systems to obtain analytical solutions, especially by invoking mean field arguments in which the noise vanishes in the limit of an infinitely large number of cells. Such averaging is allowed when the fluctuations are indeed uncorrelated. But this cannot be the case when e.g. network activity deviates from asynchronous behavior, or when neurons share inputs (Salinas and Sejnowski, 2001; Series, Latham and Pouget 2004). Ma et al. (2006) have also shown that Poisson noise may be special because it allows encoded quantities to be combined using appropriate probabilistic rules.

Whatever the sources of noise, it is natural to think that some of them may have been put to good use in the evolutionary process. This intriguing possibility is vigorously being explored.